Reinforcement learning, a type of machine learning, can tackle a wide range of complex issues. Some of the applications include autonomous driving, robotics, trading strategies, healthcare treatment policy, warehouse management, strategic game theory, and many others. The list goes on long enough that one might be tempted to see the technology as magic. If you have big challenges in need of novel solutions, then this post is for you.

Arthur C. Clarke penned three adages in the ’60s and ’70s that became known as his three laws. If you read many tech blogs, you probably know the third law, which reads:

“Any sufficiently advanced technology is indistinguishable from magic.”

The author of said blog is usually quoting this line while standing in awe before an impressive new tool or research development. And rightly so; there are many technological marvels these days. Here, however, we want to take the line with a different inflection:

“At the heart of some seemingly magical feats lies technology – developed, practiced, and understood.”

Reinforcement learning is one such case. At the core of this technology is a simple objective: given a set of observations, produce actions that return the greatest reward. Depending on the implementation, the reward can be short-term, long-term, or cooperative with other trained reinforcement learning models. From this basic concept we get a wide array of applications:

In each of these examples, the computer agent is the core code that perceives the environment, takes action, and learns from the result. A given agent starts out knowing very little about the tasks set before it. It has a list of actions it is allowed to take, a set of things in the environment to pay attention to, and a reward function it is attempting to maximize. As the agent explores the environment, each experience pairs observations and actions with a reward, and those rewards drive learning. There may be a host of tricks employed to speed up learning, favor long-term growth over near-sighted solutions, generalize what has been learned, and so on, but all of these approaches share a common learning cycle:

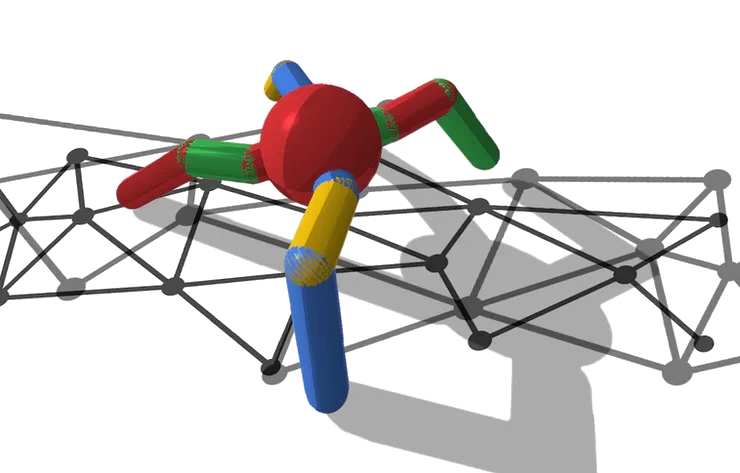

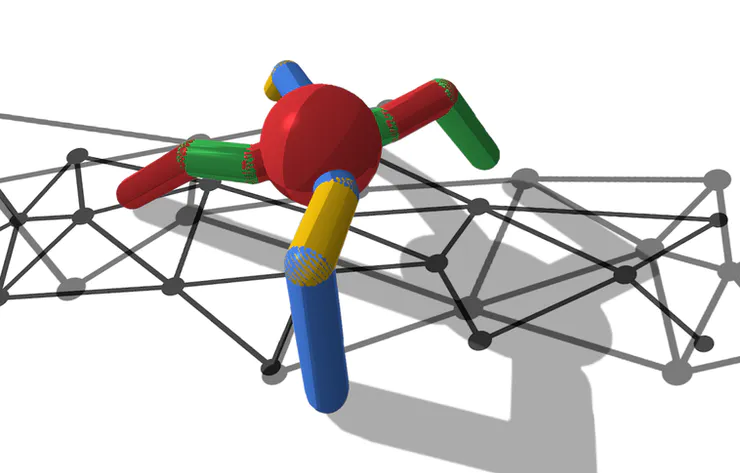
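To make the cycle concrete, here is a minimal sketch of it in Python. Everything in it is our own illustration rather than part of any particular library: the five-cell corridor environment, the `step`, `train`, and `greedy_policy` names, and the hyperparameter values are all assumptions. The learning rule is tabular Q-learning, one common technique among many. The agent observes its state, picks an action (mostly its best guess, occasionally a random one), receives a reward, and updates its estimate.

```python
import random

N_STATES = 5        # hypothetical corridor: cells 0..4, reward waits in cell 4
ACTIONS = (-1, +1)  # step left or step right


def step(state, action):
    """Toy environment: move, stay inside the corridor, pay 1.0 at the goal."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Run the observe -> act -> reward -> learn cycle with tabular Q-learning."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Act: explore at random sometimes, otherwise exploit the best estimate.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                best = max(q[(state, a)] for a in ACTIONS)
                action = rng.choice([a for a in ACTIONS if q[(state, a)] == best])
            # Experience: the environment answers with a new state and a reward.
            next_state, reward, done = step(state, action)
            # Learn: nudge the estimate toward reward plus discounted future value.
            future = 0.0 if done else gamma * max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + future - q[(state, action)])
            state = next_state
    return q


def greedy_policy(q):
    """Best known action for each non-goal cell."""
    return [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]


q_table = train()
print(greedy_policy(q_table))  # [1, 1, 1, 1]: always step right, toward the reward
```

The discount factor `gamma` is where the short-term versus long-term trade-off shows up in this sketch: values close to 0 make the agent care only about the immediate reward, while values close to 1 make distant rewards count almost as much as immediate ones.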

As an example, imagine our robot (agent) from the picture above is tasked with picking up toys in a child’s room; we’ll say the robot knows how to move and pick up objects, but doesn’t yet know what the toys are or what should be done with them. The robot will likely progress through learning like this:

Depending on the complexity of the environment and the agent’s goals, the agent may learn everything it needs to know in relatively short order. In more complex cases, the agent may need much more experience to arrive at reasonable solutions. For example, in the OpenAI hide and seek study mentioned above, the agents were trained on hundreds of millions of episodes, which amounts to billions of time-steps. In these larger, more complex cases, the architecture of the learning pipeline itself becomes significant.

To be clear, this is not an exhaustive search where the computer simply brute-forces every possible action/environment combination and returns the best one it found. That is possible in certain small-scale scenarios, but it would be dauntingly expensive, and often impossible, in large scenarios with a practically unlimited number of combinations to explore. Instead, successful reinforcement learning algorithms use various techniques to learn effective action policies in comparatively short order. In other words, they learn to fill the gaps between what they have experienced and what they have not, a.k.a. inference.
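A bit of arithmetic shows why brute force runs out of road so quickly. The sketch below counts the distinct action sequences an exhaustive search would have to try; the function name and the numbers (4 available actions, episodes of 10 or 50 steps) are made up for illustration and are not taken from any of the examples above.

```python
def count_action_sequences(num_actions: int, horizon: int) -> int:
    """Distinct action sequences when one of num_actions is chosen at each step."""
    return num_actions ** horizon


print(count_action_sequences(4, 10))  # 1048576
print(count_action_sequences(4, 50))  # 1267650600228229401496703205376
```

Even this toy setup goes from about a million sequences at 10 steps to roughly 1.27 × 10^30 at 50 steps, and that is before accounting for any randomness in the environment.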

So far you’ve learned some fundamental concepts of reinforcement learning. If you would like to work toward a deeper understanding, we are preparing a few tutorials to help you make your own models and gain practical insight into reinforcement learning. By following the tutorials, you will help a robot ant learn to walk and accomplish tasks. We hope these help you feel empowered to implement reinforcement learning on your own.